A Look at How Deepfakes Work

It's no April Fools' joke: deepfakes are a real concern. This deceptive practice, which inserts fake faces or speech into videos or photographs, keeps growing more precise. As the underlying technology improves, deepfakes are becoming easier to produce and harder to detect. So how exactly do deepfakes work? Here's a look at the machine learning tools that create them.

Neural Networks: the First Connections

Neural networks, loosely patterned after the neurons in our own brains, were first conceptualized back in 1943, when researchers considered how the brain's biological networks work and how they might serve as a model for artificial intelligence. By 1975, before most people had ever used a computer, the first neural networks had already been built. These early networks were rudimentary, but the decades since have produced far more advanced technology, including several types of neural networks.

Like the synaptic pathways in the brain, neural networks take in a stimulus (an input), pass it through a series of interconnected nodes that process it, and produce a response (an output). Each connection carries a weight, and over time, repeated exposure to similar inputs strengthens some connections while weakening, or effectively pruning away, others. (For example, the network learns that eyes take a fairly predictable shape and sit below the eyebrows; this pattern is reinforced again and again, and any early, confused notion that eyes and eyebrows are arranged differently is discarded.) In the deepfake process, this enables increasingly precise facial recognition, which in turn sets the stage for facial creation.
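To make "strengthening" and "pruning" concrete, here is a minimal sketch of a single artificial neuron trained with the classic delta rule (a simple textbook learning rule, not what modern deepfake models use). The features and names are hypothetical: the first input is 1 when eyes sit below eyebrows, the second is an irrelevant cue coded as +1 or -1, and the neuron starts out with a "confused" weight of 0.8 on that irrelevant cue.

```python
def train_neuron(samples, weights, lr=0.1, epochs=200):
    """Train one linear neuron (output = sum of weight * input) with the delta rule.

    samples: list of (inputs, target) pairs; weights: the starting weights.
    """
    w = list(weights)
    for _ in range(epochs):
        for inputs, target in samples:
            output = sum(wi * xi for wi, xi in zip(w, inputs))
            error = target - output
            # Move each weight in proportion to the error and to its own input,
            # so connections that help the prediction grow and useless ones shrink.
            w = [wi + lr * error * xi for wi, xi in zip(w, inputs)]
    return w


# Hypothetical toy data: (eyes_below_eyebrows, irrelevant_cue) -> looks_like_a_face
samples = [
    ((1.0, 1.0), 1.0),
    ((1.0, -1.0), 1.0),
    ((0.0, 1.0), 0.0),
    ((0.0, -1.0), 0.0),
]
weights = train_neuron(samples, weights=[0.0, 0.8])
print(weights)  # roughly [1.0, 0.0]
```

After repeated exposure, the weight on the meaningful "eyes below eyebrows" input grows toward 1, while the initial weight on the irrelevant cue shrinks toward zero, a tiny version of one pathway being reinforced and another being pruned.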

Deep Learning: More Layers, More Accuracy

When a neural network has many layers of connections stacked between its input and its output, it becomes a deep learning system. Such a system can process massive amounts of input data, refining its understanding with each pass. Moreover, it works out for itself which features matter rather than relying on a human to spell them out, and some deep learning methods can learn from images or videos that no one has manually labeled. (To return to the brain comparison, the network learns from experience, much as a developing baby does: there is no need for a parent to say, "These are my eyes," or "My mouth makes these sounds." Such understandings develop on their own.)
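In code, "deep" simply means that many layers are chained together, each feeding its output to the next. The sketch below shows only the forward pass (how an input flows through the layers) with made-up weights; the function names are hypothetical, a real network would learn its weights from data, and image models typically use convolutional layers rather than the small fully connected ones assumed here.

```python
def relu(values):
    """Rectified linear activation: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass the inputs through each layer in turn; hidden layers apply ReLU."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:  # no activation on the final output layer
            activations = relu(activations)
    return activations


# Hypothetical weights for a tiny network: 2 inputs -> 3 -> 2 -> 1 output.
layers = [
    ([[1, 0], [0, 1], [1, -1]], [0, 0, 0]),  # hidden layer 1
    ([[1, 1, 1], [-1, 0, 1]], [0, 0.5]),     # hidden layer 2
    ([[2, 1]], [1]),                         # output layer
]
print(forward([1.0, 2.0], layers))  # prints [7.0]
```

Making the network deeper is just a matter of appending more entries to `layers`; each extra layer lets the network build more abstract features out of the ones computed before it.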

The advances of deep learning have powered recognition capabilities across all sorts of technologies: automated driving, medical diagnosis, fraud detection, and home assistants are just a few. But they have also made convincing deepfakes far easier to achieve; the term itself is a blend of "deep learning" and "fakes." With machines able to learn facial and audio patterns better and faster on their own, fabricating material becomes that much easier for anyone who wants to.

GANs: the Superhighway to Deepfakes

Perhaps the biggest stride of all toward undetectable deepfakes was the development of generative adversarial networks, or GANs, a method machine learning researcher Ian Goodfellow introduced in 2014. GANs bridge the ability to detect facial patterns with the ability to actually create them. What sets a GAN apart is that it consists of two deep neural networks trained against each other. The first, called the generator, creates fake media; the second, called the discriminator, must decide whether each piece of media it sees is real or fake. It's a game of dueling machines, but it's rigged in the generator's favor: training continues until the discriminator can no longer reliably tell when the generator is handing it something fake. At that point, the generator's output is ready to be shared as photos or videos among humans, who tend to be pretty poor discriminators themselves.
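The adversarial game can be sketched at toy scale. This minimal example assumes one-dimensional numbers instead of images, a generator G(z) = z + b that can only shift random noise, and a discriminator that is a single logistic unit D(x) = sigmoid(w*x + c); the function and parameter names are hypothetical. Both players take turns updating by plain gradient descent, and the generator uses the "non-saturating" loss suggested in Goodfellow's original paper (it tries to maximize log D(G(z))).

```python
import math
import random


def sigmoid(t: float) -> float:
    """Logistic function, written to avoid overflow for large negative t."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean=2.0, steps=3000, batch=64, lr=0.05, seed=0):
    """Train a one-dimensional toy GAN and return the generator's shift b.

    Real data:     x ~ Normal(real_mean, 1)
    Generator:     G(z) = z + b, with noise z ~ Normal(0, 1)
    Discriminator: D(x) = sigmoid(w * x + c), its probability that x is real
    """
    rng = random.Random(seed)
    b = 0.0          # generator starts far from the real data
    w, c = 0.0, 0.0  # discriminator starts with no opinion (D = 0.5)
    for _ in range(steps):
        # Discriminator turn: lower -log D(real) - log(1 - D(fake)).
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        dw = dc = 0.0
        for x in real:
            g = sigmoid(w * x + c) - 1.0  # gradient of the loss w.r.t. the logit
            dw += g * x
            dc += g
        for x in fake:
            g = sigmoid(w * x + c)
            dw += g * x
            dc += g
        w -= lr * dw / batch
        c -= lr * dc / batch

        # Generator turn: lower -log D(G(z)), i.e. make fakes look real to D.
        db = 0.0
        for _ in range(batch):
            x = rng.gauss(0.0, 1.0) + b
            db += (sigmoid(w * x + c) - 1.0) * w  # chain rule: dx/db = 1
        b -= lr * db / batch
    return b


print(f"learned shift b = {train_toy_gan():.2f} (real mean is 2.0)")
```

Because this discriminator can only compare averages, the generator only has to match the real data's mean; in real deepfake systems both players are deep networks comparing far richer features. Note also that training ends in a stalemate rather than a permanent win: once the fakes match the real data, the discriminator's best guess is about 50/50.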

What Can We Do About Deepfakes?

The unfortunate result of these machine learning capabilities is material that can influence anything from the public perception of celebrities to political events. So what do we do when we can no longer discriminate the real from the fake? Here are a few ideas:

- Detect the less refined deepfakes that can still be spotted. While the technology is getting more sophisticated, plenty of amateur software still produces detectable fakes. Flagging these with a tool such as Microsoft's Video Authenticator can at least curb part of the problem.

- Consider the media's source and path. In the spirit of Project Origin, which verifies a video's original source and any modifications it has gone through, viewers should consider where a video (or image) came from and how it has been passed along. Is the publisher a reliable source? Is the platform a place where people typically share trustworthy news?

- Sharpen our social and critical thinking skills. Thinking more critically about the media we consume is a good habit to develop. Does the content make logical sense? How does it compare to other related material? As Ian Goodfellow himself has suggested, learning how to assess misleading claims should become a regular part of school curricula, starting with children.

- Pursue or support regulation of fabricated media. Deepfakes occupy a tricky gray area between freedom of speech and unfair manipulation. While this is difficult terrain, society would be wise to move toward greater security, transparency, and verified information, whether through broadly recognized standards, initiatives, or government legislation.
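Checking a media file's source and modifications often rests on a simple building block: a cryptographic fingerprint of the file. The sketch below is not Project Origin's actual method; it assumes a hypothetical publisher that posts the SHA-256 digest of each video it releases, so anyone can check whether the copy they received has been altered. The function names are illustrative.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of a media file's raw bytes as hex."""
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_digest: str) -> bool:
    """True only if these bytes are identical to the ones the publisher hashed."""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(fingerprint(data), published_digest.strip().lower())


# Hypothetical example: the publisher releases a clip and posts its digest.
original_clip = b"\x00\x01example video bytes"
posted_digest = fingerprint(original_clip)

print(matches_published(original_clip, posted_digest))            # prints True
print(matches_published(original_clip + b"\xff", posted_digest))  # prints False
```

Any change to the bytes, even re-encoding or trimming, breaks the match, which is what makes tampering visible. A real provenance system also has to prove who published the digest, typically with digital signatures, which this sketch leaves out.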

Stay connected. Join the Infused Innovations email list!

Share this

You May Also Like

These Related Posts

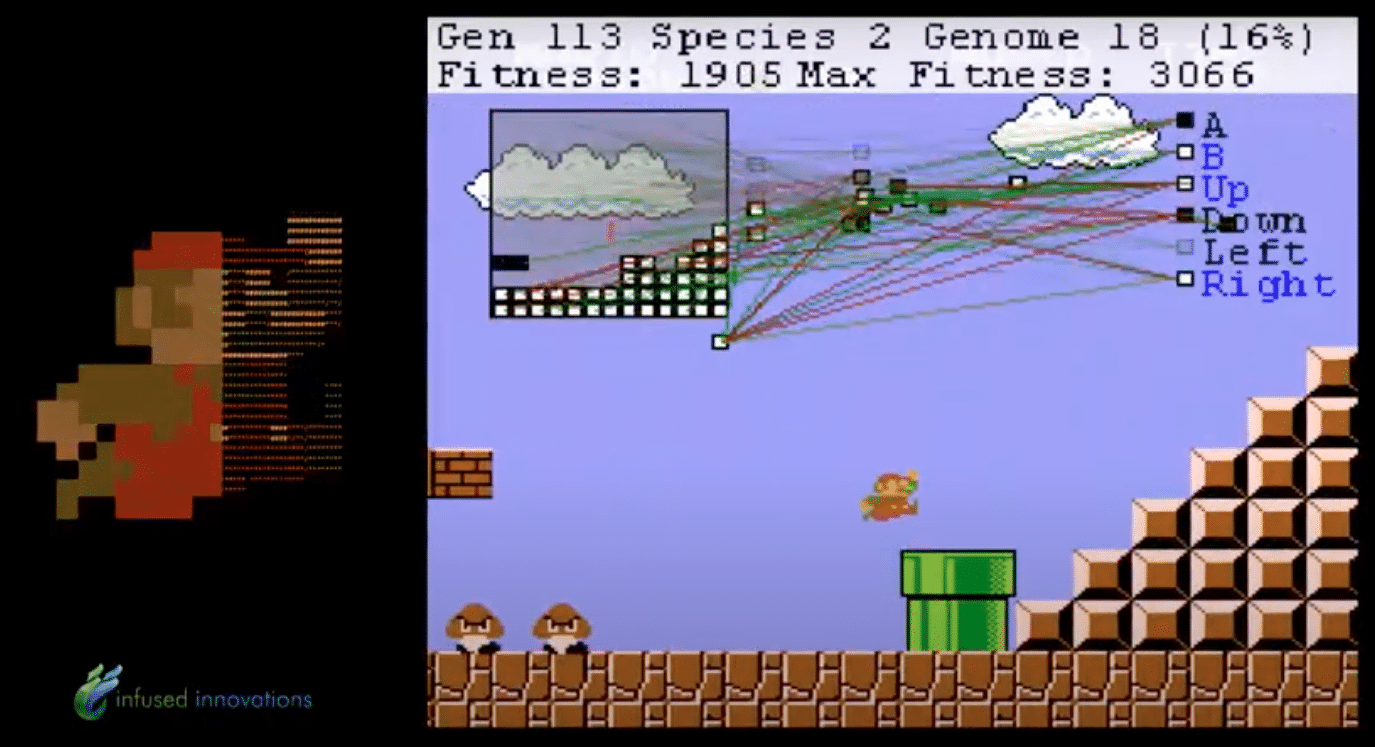

Using Machine Learning to Beat a Video Game

5 Big Highlights from Microsoft Build 2023

No Comments Yet

Let us know what you think